On Thursdays at noon Yhouse holds a lunch meeting at the Institute for Advanced Study in Princeton. The format is a 15-minute informal talk by a speaker followed by a longer open-ended discussion among the participants, triggered by, but not necessarily confined to, the topic of the talk. In order to share these discussions, I am posting a synopsis of the weekly meetings.

Synopsis of Li Zhaoping’s Yhouse Luncheon talk 11/9/17

Presenter: Zhaoping Li (University College London)

Title: Looking And Seeing In Visual Functions Of The Brain

Abstract: "Vision is a window to the brain, and I will give a short introduction and demonstrate that it can be seen as mainly a problem of "looking and seeing", which are two separable processes in the brain. Understanding vision requires both experimental and theoretical approaches, and to study the brain using our own brains have its peculiar difficulties."

Present: Piet Hut, Olaf Witkowski, Yuko Ishihara, Arpita Tripathi, Li Zhaoping, Michael Solomon

We made introductions. Arpita is new to the group and reported that she worked in public health in India before recently moving to Princeton. Li Zhaoping studied physics in China before getting her PhD in Brain Science at Caltech and coming to the IAS 27 years ago as a postdoc, working then on the olfactory system. She currently works at University College London on vision, with an emphasis on computer science and on artificial and natural intelligence.

She began with the statement that “The Eyes are the window to the Brain.” The visual information pathway begins with the eye, but much of the brain is involved: in the monkey one half of the volume of the brain is for vision, and in humans one third. Piet asked, “How sure are we that these areas are not involved with other functions such as memory or thinking?” If we place an electrode in an area of the brain and find that a neuron there responds to visual and not to auditory or other stimuli, then we attribute that area to vision. Higher animals devote more of the brain to vision. Lower animals devote more to smell. Moving from the retina along the optic nerve from each eye to the optic chiasm, we arrive at V1 (visual one), the primary visual cortex, which lies in the occipital cortex in the posterior part of the brain. Signals also travel to the superior colliculus, and then the frontal lobes become involved.

There have been some surprises in the study of vision. In the 1960s at MIT, Gerald Sussman (now a professor at MIT and someone Piet knows well) spent a summer on a project trying to work out how, given a picture of, e.g., a glass and a dinner plate as input, one could construct a computer algorithm that outputs “plate and glass”. This is simple for humans to do, but not for computers. The computer “sees” pixels and numbers, not a plate and glass.

She showed two pictures of people boarding an airplane and asked us to tell what was different between the two. Most identified that the plane engine was missing in one picture, but it took some time to identify that, contrary to our impression that we see all details of the picture with our first glance. We are often blind to gross details. In 1953 Stephen Kuffler showed that neurons in the retina respond to features of a center distinct from the immediate surroundings (like a target) and send these neural signals to V1. If we put electrodes in the brain of an animal we can measure small activation potentials, on the order of 10 millivolts, when the animal is viewing an image on a screen. In the 1960s Hubel and Wiesel studied V1 and found that the neurons were not responding to the image features (centers distinct from surround) that activate retinal neurons, but to small bars in the visual field. Some of the neurons responded to vertical bars, some to horizontal, some to moving bars, some to color, etc. They received the Nobel Prize for their work. In monkeys V1 is 12% of the neocortical volume. Since that work, however, experimental progress in understanding how V2, V3, or V4 work has not been as impressive. So, theorists are now looking at what happens in V1 and after. Somehow we gain object recognition, and that recognition is rotationally invariant, i.e. we identify objects even when they are rotated in space.

She showed a display of oblique lines in four rows, with one line the mirror image of all the rest (////\//). It is relatively easy to pick out the different line. When she showed a similar display of X-shaped lines with one X different (⌿⌿⍀⌿⌿), it was more difficult to find the different one. When she showed a display with 22 rows and 30 columns, it was much more difficult to find the different X. In an early experiment, she followed the eye movements of subjects viewing the displays. For most subjects in their initial trials, the gaze travelled around the target within two seconds, then abandoned the target, and then returned. She described this as “Looking” and “Seeing”.

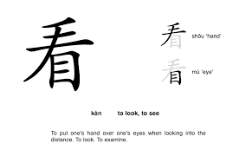

In her model of vision, we begin with Input, move to Encoding, then to Selection, and then to Decoding, with signals flowing both to and from Decoding (Input -> Encoding -> Selection <-> Decoding). The Selection phase is Looking; the Decoding is Seeing. Looking is Attention selection; Seeing is Recognition. She drew the Chinese pictograms for Looking and Seeing and said these two characters mostly occur together in colloquial speech. (See below at end of discussion.) Piet pointed out that the pictogram for look has the hand in it. The pictogram in Chinese for Look is the symbol for hand over the symbol for eye, as in hand palm down over eye for looking. The pictogram for seeing is the symbol for eye over the symbol for son (or man), as in son of the eye.

The human eye moves 3 times per second. The input from the retina is on the order of 20 frames per second, or about 20 megabytes/sec. This is far too much to interpret. Our attention bottleneck is about 40 bits/second, much less. If 20 megabytes is about one large book, then 40 bits is about two sentences. So, the brain chooses which 40 bits (two sentences) to focus on and deletes the rest. Traditional wisdom assumes that this selection occurs in the frontal part of the human brain (Koch & Ullman 1985; Treisman, 1980s). If the different bar is colored red, you see it right away. In humans, the frontal lobes are about 29% of the brain, in monkeys 17%, and in dogs 12.5%. Non-mammals have no frontal cortex. She showed a video of a frog confronted with moving pictures of bugs. The frog jumps at anything moving. The frog has no V1 or V2.
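As a rough check on the scale of this bottleneck, the two figures quoted above can be compared directly. The short sketch below assumes 1 megabyte = 10^6 bytes and 8 bits per byte; the unit convention and the variable names are illustrative assumptions, not something stated in the talk.

```python
# Figures quoted in the talk. The unit convention (1 MB = 10**6 bytes)
# and all variable names are assumptions made for this illustration.
retina_bytes_per_sec = 20 * 10**6     # about 20 megabytes/sec from the retina
attention_bits_per_sec = 40           # attention bottleneck, about 40 bits/sec

retina_bits_per_sec = retina_bytes_per_sec * 8
reduction_factor = retina_bits_per_sec // attention_bits_per_sec
fraction_kept = attention_bits_per_sec / retina_bits_per_sec

print(f"retinal input:    {retina_bits_per_sec:,} bits/sec")
print(f"reduction factor: {reduction_factor:,}")
print(f"fraction kept:    {fraction_kept:.1e}")
```

Under these assumptions, roughly one bit in four million passes through the attention bottleneck.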

According to her Saliency Hypothesis, V1 creates a bottom-up saliency map to guide looking, or gaze shifts. Hence, V2’s role should be seen in light of what V1 has done. The frog has a superior colliculus but no neocortex. In higher animals, the neocortex takes on the function of the superior colliculus and the superior colliculus shrinks dramatically. In her theory, the primary visual cortex, i.e. V1, of primates creates the saliency map and sends it to the superior colliculus, which executes the map by shifting gaze to the most salient location. She thinks that in lower animals the superior colliculus does both the saliency map creation and the gaze shift execution. Piet asked if she was familiar with Rodney Brooks’ robots. These are cockroach-like robots with layers of computer inputs, where each layer focuses on a single aspect of the visual field, and the robot navigates without constructing a map of the field. So, to see you must choose what to pay attention to. Even though we move our eyes three times per second, we are only aware of a tiny fraction of that input, about 10 times/minute, and our brain deletes the input not selected by attention (which is shifted by gaze shifts).

Piet noted that a similar model of focused attention is important in embodied cognition that Olaf is working on. Perhaps they could collaborate on a paper on this topic. Such interaction of different disciplines is just what Yhouse intends to promote.

In V1, nearby neurons activated by similar features (e.g. vertical, horizontal, red, moving right, etc.) tend to suppress each other. Hence a neuron responding to a vertical bar can have its response suppressed when there are other vertical bars nearby. Piet quoted, “Neurons that wire together, fire together.” Like-to-Like suppression occurs for vertical, horizontal, moving, or color reactive neurons. We can measure this by measuring the firing rate for specific neurons in V1. A map of these firing rates is sent to the superior colliculus, which shifts gaze to the location with the highest firing rate. A surprising prediction that supports this theory is that invisible features attract attention. She showed an example to illustrate this. When different images are shown to the left and right eye, the brain combines them to provide a fused perception. If you start with an image of a grid of lines that has all forward slash lines and one backward slash line (/////\/////), and you create one image that is a copy of this image except for one forward slash missing (// //\/////) and show it to the left eye, and you create another image that contains only the missing forward slash ( / ) and show it to the right eye, then the fused perception is the summation of the left eye and right eye images and hence is the original image (/////\/////). But when most people view this pair of images, their gaze moves first to the location of the forward slash shown to the right eye, even though they cannot tell the difference between this forward slash and the other forward slashes shown to the left eye. The invisible feature (which eye is the origin of the visual input) draws attention. That was one of her first published experiments and has since been replicated by other groups.

One of her present research projects involves measuring saccades (the series of small jerky movements of the eyes when changing focus from one point to another) to pop-out targets in monkeys while measuring V1 neural responses.
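The Like-to-Like suppression idea described above can be illustrated with a toy sketch. This is not Zhaoping's V1 model. It is a minimal illustration under stated assumptions: each grid cell holds one feature label, every cell starts with a response of 1.0, and that response is reduced in proportion to the fraction of immediate neighbors sharing the same label. The function names `saliency_map` and `first_gaze` and the constant `SUPPRESSION` are hypothetical. Orientation and eye of origin are treated as separate label grids, so the sketch says nothing about which of the two is the stronger cue.

```python
import numpy as np

SUPPRESSION = 0.8  # assumed strength of like-to-like suppression (illustrative)

def saliency_map(features):
    """Toy like-to-like suppression on a 2-D grid of feature labels.

    Each cell starts with response 1.0 and loses SUPPRESSION times the
    fraction of its (up to 8) neighbors that carry the same label.
    """
    rows, cols = features.shape
    response = np.ones((rows, cols))
    for r in range(rows):
        for c in range(cols):
            same, total = 0, 0
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    if dr == 0 and dc == 0:
                        continue  # a cell does not suppress itself
                    rr, cc = r + dr, c + dc
                    if 0 <= rr < rows and 0 <= cc < cols:
                        total += 1
                        if features[rr, cc] == features[r, c]:
                            same += 1
            response[r, c] -= SUPPRESSION * same / total
    return response

def first_gaze(response):
    """Return the (row, col) with the highest response: the first gaze target."""
    row, col = np.unravel_index(np.argmax(response), response.shape)
    return int(row), int(col)

# Orientation singleton: 0 = "/" bars, 1 = the single "\" bar.
orientation = np.zeros((5, 7), dtype=int)
orientation[2, 4] = 1
print(first_gaze(saliency_map(orientation)))

# Eye-of-origin singleton: 0 = bar shown to the left eye, 1 = right eye.
eye_of_origin = np.zeros((5, 7), dtype=int)
eye_of_origin[1, 1] = 1
print(first_gaze(saliency_map(eye_of_origin)))
```

In both cases the lone differing cell escapes suppression and keeps the highest response, so the toy gaze lands on it. The second case applies the same rule to a label that is not visible in the fused image, in the spirit of the eye-of-origin experiment.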

Olaf said he was surprised to find that neurons in vision behave like swarms of animals, like ants or honeybees. He would not have expected similar algorithms to be at work for neurons for sight. Specifically, the process is bottom-up. An example is how birds flying in formation in a flock respond to avoid a predator. There is no top-down communication from a lead bird telling all to turn this way. That would be too slow. Instead, each bird moves in relation to a neighboring bird in the formation, rapidly changing course to avoid the predator. Zhaoping asked whether these birds have Like-to-Like suppressors. Olaf responded that he had not looked at that. Each bird reacts to its neighbor just as each neuron reacts to its neighbor.

Regarding top-down control, Michael asked if she was familiar with Anton syndrome (also called Anton-Babinski syndrome). This is a rare medical condition in which people who have suffered injuries resulting in loss of both the right and left occipital (visual) cortex, and are therefore blind, dramatically deny their blindness. They will bump into objects in the environment, but still not recognize that they cannot see. This is attributed to the Hetero-Modal cortex (also called the association cortex), which comprises two areas: one in the frontal cortex and one posteriorly at the confluence of the temporal, occipital, and parietal lobes. These areas receive input from multiple unimodal sensory and motor areas and create an integrated and coherent model of the environment. In Anton syndrome, the hetero-modal cortex maintains this integrated model even though there is no visual input.

We ended our discussion here.

Here are the two Chinese pictograms described above:

看 = look. The symbol for hand over the symbol for eye.

見 = see. In its unsimplified form, the upper part is the symbol for eye, while the lower part symbolises a man, thus forming the meaning "to see".

Respectfully,

Michael J. Solomon, MD